Reference: https://d365byjp.blogspot.com/2018/03/setup-sync-for-new-custom-table-from-ax.html

The post shows how to create a new custom table across both AX and the channel and setup sync(CDX) in Dynamics 365 Retail.

Changes are in AX tables, CDX, Channel DB

Setup steps:-

1. AX Customization:

- Create a new Table called ISVRetailStoreHoursTable

- Enum field: Day, enum type: WeekDays, mandatory,

- Int field: OpenTime, extended data type: TimeOfDay, mandatory,

- Int field: ClosingTime, extended data type: TimeOfDay, mandatory,

- Int64 field: RetailStoreTable, extended data type: RefRecId, mandatory

- Create a Foreign Key Relation to RetailStoreTable

- Name: RetailStoreTable

- Cardinality: ZeroOne

- RelatedTable: RetailStoreTable

- Related table cardinality: ExactlyOne

- Relationship type: Association

- Contraint: Normal, name: RetailStoreTable, Field: RetailStoreTable, Related field: RecId

- Populate some data by running below job or manually enter some data.

class Temp_InsertData

{

/// <summary>

/// Runs the class with the specified arguments.

/// </summary>

/// <param name = "_args">The specified arguments.</param>

public static void main(Args _args)

{

ISVRetailStoreHoursTable storeDayHoursTable;

RetailStoreTable storeTable;

Int64 numberOfDeletedRows;

// insert data for houston

select * from storeTable

where storeTable.StoreNumber == "HOUSTON";

print storeTable.Recid;

ttsBegin;

delete_from storeDayHoursTable

where storeDayHoursTable.RetailStoreTable == storeTable.RecId;

numberOfDeletedRows = storeDayHoursTable.RowCount();

print numberOfDeletedRows;

// yyyy-mm-ddThh:mm:ss

storeDayHoursTable.RetailStoreTable = storeTable.RecId;

storeDayHoursTable.Day = WeekDays::Monday;

storeDayHoursTable.OpenTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T08:00:00"));

storeDayHoursTable.ClosingTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T20:00:00"));

storeDayHoursTable.insert();

storeDayHoursTable.RetailStoreTable = storeTable.RecId;

storeDayHoursTable.Day = WeekDays::Tuesday;

storeDayHoursTable.OpenTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T08:00:00"));

storeDayHoursTable.ClosingTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T20:00:00"));

storeDayHoursTable.insert();

storeDayHoursTable.RetailStoreTable = storeTable.RecId;

storeDayHoursTable.Day = WeekDays::Wednesday;

storeDayHoursTable.OpenTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T11:00:00"));

storeDayHoursTable.ClosingTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T21:00:00"));

storeDayHoursTable.insert();

storeDayHoursTable.RetailStoreTable = storeTable.RecId;

storeDayHoursTable.Day = WeekDays::Thursday;

storeDayHoursTable.OpenTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T09:00:00"));

storeDayHoursTable.ClosingTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T20:00:00"));

storeDayHoursTable.insert();

storeDayHoursTable.RetailStoreTable = storeTable.RecId;

storeDayHoursTable.Day = WeekDays::Friday;

storeDayHoursTable.OpenTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T08:00:00"));

storeDayHoursTable.ClosingTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T20:00:00"));

storeDayHoursTable.insert();

storeDayHoursTable.RetailStoreTable = storeTable.RecId;

storeDayHoursTable.Day = WeekDays::Saturday;

storeDayHoursTable.OpenTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T10:00:00"));

storeDayHoursTable.ClosingTime = DateTimeUtil::time(DateTimeUtil::parse("2015-05-18T18:00:00"));

storeDayHoursTable.insert();

ttsCommit;

}

}

2. CDX CHANGE:-

- - Create a new resource file with all job, sub jobs and table information as below and save it as ISVRetailStoreHoursTable.xml file.

<RetailCdxSeedData ChannelDBMajorVersion="7" ChannelDBSchema="ext" Name="AX7">

<Jobs>

<!-- <Job DescriptionLabelId="REX4520710" Description="Custom job" Id="1070"/> -->

</Jobs>

<Subjobs>

<Subjob Id="ISVRetailStoreHoursTable" TargetTableSchema="ext" AxTableName="ISVRetailStoreHoursTable">

<ScheduledByJobs>

<ScheduledByJob>1070</ScheduledByJob>

</ScheduledByJobs>

<AxFields>

<Field Name="Day"/>

<Field Name="RetailStoreTable"/>

<Field Name="OpenTime"/>

<Field Name="ClosingTime"/>

<Field Name="RecId"/>

</AxFields>

</Subjob>

</Subjobs>

</RetailCdxSeedData>

- Right-click the project, and then select Add > New Item

- In the Add New item dialog box, select Resources, name the resource file RetailCDXSeedDataAX7_Demo, and then select Add.

- In the Select a Resource file dialog box, find the resource file that you created in step 2, and then select Open.

- Add a new class that should be used to handle the registerCDXSeedDataExtension event. Search for the RetailCDXSeedDataBase class in AOT, and then open it in the designer. Right-click the registerCDXSeedDataExtension delegate, and then select Copy event handler.

- Go to the event handler class that you created, and paste the following event handler code into it.

class RetailCDXSeedDataAX7EventHandler

{

/// <summary>

///

/// </summary>

/// <param name="originalCDXSeedDataResource"></param>

/// <param name="resources"></param>

[SubscribesTo(classStr(RetailCDXSeedDataBase), delegateStr(RetailCDXSeedDataBase, registerCDXSeedDataExtension))]

public static void RetailCDXSeedDataBase_registerCDXSeedDataExtension(str originalCDXSeedDataResource, List resources)

{

if (originalCDXSeedDataResource == resourceStr(RetailCDXSeedDataAX7))

{

resources.addEnd(resourceStr(RetailCDXSeedDataAX7_Demo));

}

}

}

- Whenever the Retail initialization class runs, it looks for any extension that implements this handler. If an extension is found, the runtime will also initialize the custom information that is found in the resource file.

- Before you add your custom resource to the list, you must verify that the originalCDXSeedDataResource resource that is being processed is RetailCDXSeedDataAX7. Otherwise, you might cause unintended results.

- Navigate to Retail > Headquarters setup > Retail scheduler and click on Initialize retail scheduler.

- In the dialog box that appears, select Delete existing configuration.

- Select OK to start the initialization.

- When the initialization is completed, the CDX scheduler jobs, subjob definitions, and distribution schedules are updated by using the original RetailCDXSeedDataAX7 resource and the customized RetailCDXSeedDataAX7_Demo resource.

3. Verify Changes:

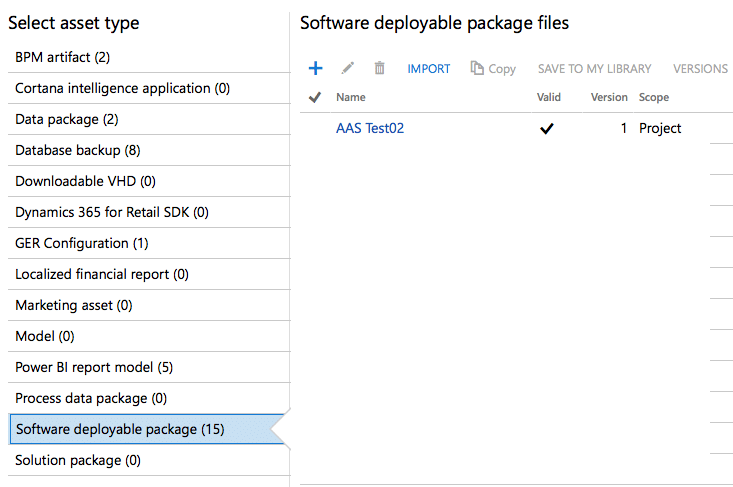

- Navigate to Retail > Headquarters setup > Retail scheduler > Scheduler subjobs , here we can see ISVRetailStoreHoursTable is added under Scheduler subjobs and scheduled by 1070 as specified in resource file.

4. Channel DB:

- Run the below query to create table in Channel database, here we should create custom tables in ext schema as we are not allowed to override the standard.

- The advantage of using ext schema , while DB upgrade we will not face any conflict .

-- Create the extension table to store the custom fields.

IF (SELECT OBJECT_ID('[ext].[ISVRETAILSTOREHOURSTABLE]')) IS NULL

BEGIN

CREATE TABLE [ext].[ISVRETAILSTOREHOURSTABLE](

[RECID] [bigint] NOT NULL,

[DAY] [int] NOT NULL DEFAULT ((0)),

[OPENTIME] [int] NOT NULL DEFAULT ((0)),

[CLOSINGTIME] [int] NOT NULL DEFAULT ((0)),

[RETAILSTORETABLE] [bigint] NOT NULL DEFAULT ((0)),

CONSTRAINT [I_ISVRETAILSTOREHOURSTABLE_RECID] PRIMARY KEY CLUSTERED

(

[RECID] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

ALTER TABLE [ext].[ISVRETAILSTOREHOURSTABLE] WITH CHECK ADD CHECK (([RECID]<>(0)))

END

GO

GRANT SELECT, INSERT, UPDATE, DELETE ON OBJECT::[ext].[ISVRETAILSTOREHOURSTABLE] TO [DataSyncUsersRole]

- Create view for the table we created that joins the original table on the channel side and the extension table on the channel side is created. This view is required so that CDX can correctly download and push the records from Retail HQ tables to the channel extension table back.

-- Create the customer extension view that is accessed by CRT to query the custom fields.

IF (SELECT OBJECT_ID('[ext].[ISVRETAILSTOREHOURSVIEW]')) IS NOT NULL

DROP VIEW [ext].[ISVRETAILSTOREHOURSVIEW]

GO

CREATE VIEW [ext].[ISVRETAILSTOREHOURSVIEW] AS

(

SELECT

sdht.DAY,

sdht.OPENTIME,

sdht.CLOSINGTIME,

sdht.RECID,

rst.STORENUMBER

FROM [ext].[ISVRETAILSTOREHOURSTABLE] sdht

INNER JOIN [ax].RETAILSTORETABLE rst ON rst.RECID = sdht.RETAILSTORETABLE

)

GO

GRANT SELECT ON OBJECT::[ext].[ISVRETAILSTOREHOURSVIEW] TO [UsersRole];

GO

5. Verify CDX:

- run 1070 job full sync (channel data group)

- check Download sessions and channel DB that the data arrived